Introduction

The digital landscape is undergoing a profound transformation.

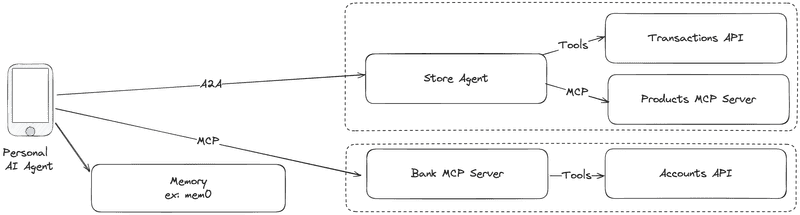

With generative AI entering our daily lives, and AI companies working on new devices that may soon sit alongside our smartphones, we're approaching a paradigm shift in how consumers interact with digital services.

It has become crucial to find new ways to expose business services for this next-generation web, where interaction will no longer rely on traditional clicking and form-filling.

In the near future, consumers won't need to visit your website to book a flight or order a pizza. Instead, their personal AI agents—deeply familiar with their preferences and personal data—will handle such tasks through natural language and voice commands.

In this article, we'll explore emerging protocols such as MCP (Model Context Protocol) and A2A (Agent-to-Agent) that enable businesses to expose their services to these personal agents, ensuring they remain relevant and accessible in the AI-first economy.

The Shift from UI to Intent

While AI agents can browse the web using tools like "browser_use" or generate code to invoke APIs, these methods are far from ideal for businesses aiming to integrate seamlessly with the web of the future.

Consumers expect fast, frictionless experiences. Asking an agent to book a hotel via a browser can take minutes—assuming it doesn’t get stuck navigating complex interfaces or misinterpreting what to click. Similarly, relying on agents to generate and execute API calls isn't always reliable, especially when faced with complex or nested request bodies.

To address these challenges, the community has started developing new standards that allow AI agents to interface with services more reliably and efficiently. Two of the most prominent initiatives are MCP (Model Context Protocol) from Anthropic and A2A (Agent-to-Agent) from Google.

Model Context Protocol (MCP)

MCP is a protocol that enables AI agents to connect to external data sources and execute tools in a reliable and structured way. It uses the JSON-RPC 2.0 messaging format.
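To make the JSON-RPC 2.0 framing concrete, here is a minimal sketch of what a tool call might look like on the wire. The `tools/call` method name comes from the MCP specification; the tool name `search_products`, its arguments, and the result text are illustrative assumptions, and the helper functions are hypothetical rather than part of any SDK.

```python
import json


def make_tool_call_request(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def make_tool_call_result(request_id: int, text: str) -> dict:
    """Build the matching JSON-RPC 2.0 response carrying a text result."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,  # a response echoes the id of the request it answers
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }


request = make_tool_call_request(1, "search_products", {"query": "wood dining table"})
response = make_tool_call_result(1, "1 product found")  # hypothetical result text
print(json.dumps(request, indent=2))
print(json.dumps(response, indent=2))
```

In practice the SDKs build and parse these envelopes for you; the sketch only shows the shape of the messages being exchanged.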

The protocol has gained rapid adoption, with companies like Shopify, PayPal, and Atlassian already implementing MCP servers to expose their services to AI agents.

The MCP specification defines four main categories of features. Servers offer the first three to clients, while the fourth is offered by clients to servers:

- Tools: These are executable functions made available to agents. They are similar to agent tools defined locally, except that execution happens on your MCP server.

- Prompts: These are templated messages designed to guide user input. Think of them as optimized, reusable prompts that help agents interact effectively with your tools.

- Resources: These provide static or dynamic data for AI models to consume—such as inventory lists, product information, or documentation.

- Sampling: This allows your server to request the generation of a message using the client’s LLM.
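As a rough illustration of how these four categories surface in the protocol, the sketch below groups some of the JSON-RPC method names defined by the MCP specification by feature. The list is not exhaustive, and the `feature_for_method` helper and `REQUEST_SENDER` table are hypothetical names introduced only for this example.

```python
from typing import Optional

# JSON-RPC method names from the MCP specification, grouped by feature.
MCP_METHODS = {
    "tools": ["tools/list", "tools/call"],
    "prompts": ["prompts/list", "prompts/get"],
    "resources": ["resources/list", "resources/read"],
    "sampling": ["sampling/createMessage"],
}

# Which side sends the request for each feature.
REQUEST_SENDER = {
    "tools": "client",
    "prompts": "client",
    "resources": "client",
    "sampling": "server",  # the server asks the client's LLM to generate a message
}


def feature_for_method(method: str) -> Optional[str]:
    """Return the feature category a JSON-RPC method belongs to, if any."""
    for feature, methods in MCP_METHODS.items():
        if method in methods:
            return feature
    return None


for method in ("tools/call", "resources/read", "sampling/createMessage"):
    feature = feature_for_method(method)
    print(f"{method} -> {feature} (sent by the {REQUEST_SENDER[feature]})")
```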

Creating an MCP Server

An MCP server acts as a gateway between AI agents and your business services. It allows you to expose your APIs (or databases, files, and so on) in a standardized format, enabling any MCP-compatible agent to understand and interact with them seamlessly.

Let’s create a simple MCP server using the Python MCP SDK that allows AI agents to search for products, explore categories, and assist in purchases.

    from typing import Any

    import httpx
    from mcp.server.fastmcp import FastMCP

    api_base_url = "https://api.escuelajs.co/api"
    client = httpx.AsyncClient()
    mcp = FastMCP("my-store-mcp-server")


    @mcp.tool()
    async def search_products(query: str) -> list[dict[str, Any]]:
        """Search for products in the catalog"""
        params = {"title": query}
        response = await client.get(
            f"{api_base_url}/v1/products",
            params=params,
        )
        return response.json()


    @mcp.resource(uri="resources://categories")
    async def product_catalog() -> list[dict[str, Any]]:
        """Fetch all available categories"""
        response = await client.get(f"{api_base_url}/v1/categories")
        return response.json()


    @mcp.prompt()
    def assist_purchase(query: str) -> str:
        """Optimized prompt for purchase assistance"""
        return f"""
        1. First, understand user needs by asking clarifying questions
        2. Search for relevant products using the search_products tool
        3. Present options with key details (price, features, ratings)
        4. Help them make an informed decision
        5. When ready, create the purchase order with their details

        Always prioritize customer satisfaction and provide honest recommendations.

        The user has asked for: {query}.
        """


    if __name__ == "__main__":
        # Serve the MCP server over Server-Sent Events (SSE)
        mcp.run(transport="sse")

Creating an MCP Client

An MCP client allows your AI agents to interact with MCP servers. Most modern agent frameworks already include support for MCP.

We won’t go into the setup of a memory-enabled agent here—that topic will be covered in a future post. For now, we’ll use a simple ReAct agent implemented with LangGraph.

    import asyncio

    from langchain_aws import ChatBedrockConverse
    from langchain_core.messages import AIMessage, HumanMessage
    from langchain_mcp_adapters.client import MultiServerMCPClient
    from langgraph.prebuilt import create_react_agent

    # Register MCP servers the agent will connect to
    client = MultiServerMCPClient(
        {
            "favorite_store": {
                "url": "http://localhost:8000/sse",
                "transport": "sse",
            },
        }
    )


    async def main():
        # Discover tools exposed by the MCP server
        tools = await client.get_tools()

        agent = create_react_agent(
            prompt="You are a helpful personal assistant.",
            model=ChatBedrockConverse(model="eu.anthropic.claude-3-7-sonnet-20250219-v1:0"),
            tools=tools,
        )

        data = await agent.ainvoke(
            {"messages": [HumanMessage(content="I want to buy a new wood dining table")]}
        )

        # Print the conversation, including any tool calls the model made
        for msg in data["messages"]:
            if isinstance(msg, HumanMessage):
                print(f"[Human]: {msg.content}\n")
            elif isinstance(msg, AIMessage):
                if isinstance(msg.content, list):
                    for part in msg.content:
                        if part["type"] == "text":
                            print(f"[AI]: {part['text']}\n")
                        elif part["type"] == "tool_use":
                            print(
                                f"[Tool Call]: {part['name']} with input {part['input']}\n"
                            )
                else:
                    print(f"[AI]: {msg.content}\n")


    if __name__ == "__main__":
        asyncio.run(main())

When you run the script, the agent will call the search_products tool on the MCP server to look up a product.

[Human]: I want to buy a new wood dining table

[AI]: I'd be happy to help you find a wood dining table. Let me search for some options in our catalog.

[Tool Call]: search_products with input {'query': 'wood dining table'}

[AI]: I've found a beautiful option for you:

**Elegant Solid Wood Dining Table** - $67

**Description:**

This sleek, contemporary dining table is crafted from high-quality solid wood with a warm finish.

It features a sturdy construction with a minimalist design that would add a touch of elegance to any home.

The table comfortably accommodates up to six guests and even includes a striking fruit bowl centerpiece.

**Details:**

- Category: Furniture

- Material: Solid wood

- Style: Contemporary, minimalist

- Seating capacity: Up to 6 people

Would you like more information about this table, or would you prefer to see additional wood dining table options?

But what if your business services are more complex, and relying on the customer’s personal agent to orchestrate tool usage isn’t sufficient? That’s where the A2A protocol comes in.

Agent-to-Agent (A2A)

The Agent-to-Agent (A2A) protocol is designed to let AI agents collaborate across different frameworks and cloud environments. It represents a shift toward interoperable, multi-agent systems: agents built with different frameworks and deployed on different clouds can work together autonomously.

Like MCP, A2A is based on the JSON-RPC 2.0 messaging format.
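As a rough sketch of what this looks like on the wire, the snippet below builds a JSON-RPC 2.0 request that sends a single text message to an agent. It assumes the `message/send` method and the `kind`/`messageId` field names used by the A2A version targeted in this article; `make_send_message_request` is a hypothetical helper, not part of the A2A SDK.

```python
import json
from uuid import uuid4


def make_send_message_request(text: str) -> dict:
    """Build a JSON-RPC 2.0 request carrying one user text message."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid4()),  # identifies this JSON-RPC call
        "method": "message/send",
        "params": {
            "message": {
                "role": "user",
                "parts": [{"kind": "text", "text": text}],
                "messageId": uuid4().hex,  # identifies the message itself
            }
        },
    }


payload = make_send_message_request("I want to buy a macbook for work")
print(json.dumps(payload, indent=2))
```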

Creating an A2A Server

A2A defines a public discovery endpoint at /.well-known/agent.json, which hosts the agent card. This card includes public metadata describing your agent's capabilities, skills, endpoint URL, and authentication requirements.
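To illustrate discovery, the sketch below derives the well-known URL from an agent's base URL and reads skill names from a card. The card is a hand-written example that mirrors the fields used later in this article, not output from a real server, and `agent_card_url` and `skill_names` are hypothetical helpers.

```python
from urllib.parse import urljoin

WELL_KNOWN_PATH = "/.well-known/agent.json"


def agent_card_url(base_url: str) -> str:
    """Return the discovery URL for an agent hosted at base_url."""
    # The well-known path is absolute, so it replaces any path in base_url.
    return urljoin(base_url, WELL_KNOWN_PATH)


# Hand-written example card (an assumption for illustration only).
example_card = {
    "name": "Sales Agent",
    "description": "A Sales agent that helps customers find and buy products",
    "url": "http://localhost:9999/",
    "version": "1.0.0",
    "defaultInputModes": ["text"],
    "defaultOutputModes": ["text"],
    "capabilities": {"streaming": True},
    "skills": [
        {
            "id": "search_product",
            "name": "search_product",
            "description": "Search a product",
            "tags": ["products", "sales"],
        }
    ],
}


def skill_names(card: dict) -> list:
    """List the names of the skills advertised in an agent card."""
    return [skill["name"] for skill in card.get("skills", [])]


print(agent_card_url("http://localhost:9999/"))
print(skill_names(example_card))
```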

Let’s start by creating a simple Sales Agent with LangGraph:

    from typing import Any

    import httpx
    from langchain_aws import ChatBedrockConverse
    from langchain_core.messages import HumanMessage
    from langchain_core.tools import tool
    from langgraph.prebuilt import create_react_agent


    @tool
    async def search_products(query: str) -> list[dict[str, Any]]:
        """Search for products in the catalog"""
        async with httpx.AsyncClient() as client:
            response = await client.get(
                "https://api.escuelajs.co/api/v1/products", params={"title": query}
            )
            return response.json()


    class SalesAgent:
        def __init__(self):
            self.agent = self.create_agent()

        def create_agent(self):
            return create_react_agent(
                prompt="""You are a helpful sales assistant.
                1. Analyze customer needs
                2. Search products using search_products tool
                3. Present options with price and features
                4. Help make informed decisions
                5. Create purchase orders when ready
                """,
                model=ChatBedrockConverse(
                    model="eu.anthropic.claude-3-7-sonnet-20250219-v1:0"
                ),
                tools=[search_products],
                debug=True,
            )

        async def invoke(self, query: str):
            response = await self.agent.ainvoke({"messages": [HumanMessage(content=query)]})
            # Return the content of the agent's final message
            return str(response["messages"][-1].content)

When building an A2A agent, you need to define its skills:

    from a2a.types import AgentSkill

    skill = AgentSkill(
        id="search_product",
        name="search_product",
        description="Search a product",
        tags=["products", "sales"],
    )

Then, you define the agent card, which will be served at /.well-known/agent.json for discovery:

    from a2a.types import AgentCapabilities, AgentCard

    agent_card = AgentCard(
        name="Sales Agent",
        description="A Sales agent that helps customers find and buy products",
        url="http://localhost:9999/",
        version="1.0.0",
        defaultInputModes=["text"],
        defaultOutputModes=["text"],
        capabilities=AgentCapabilities(streaming=True),
        skills=[skill],
    )

Next, you create an agent executor, which handles incoming A2A requests and generates responses:

    from a2a.server.agent_execution import AgentExecutor, RequestContext
    from a2a.server.events import EventQueue
    from a2a.utils import new_agent_text_message


    class SalesAgentExecutor(AgentExecutor):
        def __init__(self):
            self.agent = SalesAgent()

        async def execute(self, context: RequestContext, event_queue: EventQueue):
            """Handle A2A requests"""
            query = context.get_user_input()
            result = await self.agent.invoke(query)
            # Publish the agent's answer as a text message event
            await event_queue.enqueue_event(new_agent_text_message(result))

        async def cancel(self, context: RequestContext, event_queue: EventQueue):
            """Handle cancellation (not implemented)"""
            raise NotImplementedError("Not implemented")

The last step is to expose and run your minimal A2A server through a FastAPI app:

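    import uvicorn
    from a2a.server.apps import A2AFastAPIApplication
    from a2a.server.request_handlers import DefaultRequestHandler
    from a2a.server.tasks import InMemoryTaskStore

    server = A2AFastAPIApplication(
        agent_card=agent_card,
        http_handler=DefaultRequestHandler(
            agent_executor=SalesAgentExecutor(),
            task_store=InMemoryTaskStore()
        ),
    )

    if __name__ == "__main__":
        uvicorn.run(server.build(), host="localhost", port=9999)

Creating an A2A Client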

An A2A client allows your agent to send structured messages to another AI agent over the A2A protocol. The first step is to discover the available agents and their skills:

    import httpx
    from a2a.client import A2ACardResolver
    from a2a.types import AgentSkill


    async def discover_agents(agent_urls):
        """Discover available A2A agents and their skills"""
        agent_cards = {}
        agent_descriptions = []

        for url in agent_urls:
            async with httpx.AsyncClient() as client:
                # Get agent information
                resolver = A2ACardResolver(httpx_client=client, base_url=url)
                card = await resolver.get_agent_card()

                # Store card for later use
                agent_cards[card.name] = card

                # Format agent capabilities for our prompt
                skills = format_agent_skills(card.skills)
                agent_descriptions.append(
                    f"- **{card.name}**: {card.description}\n Skills: {skills}"
                )

        return agent_cards, agent_descriptions


    def format_agent_skills(skills: list[AgentSkill]):
        return "\n ".join(f"- {skill.name}: {skill.description}" for skill in skills)

Next, we need a handoff tool that lets the personal agent send messages to business agents via the A2A protocol:

    from typing import Any, Dict
    from uuid import uuid4

    import httpx
    from a2a.client import A2AClient
    from a2a.types import (
        AgentCard, Message, MessageSendParams, Part, Role,
        SendMessageRequest, SendMessageSuccessResponse, TextPart,
    )
    from langchain_core.tools import tool


    async def create_handoff_tool(agent_cards: dict[str, AgentCard]):
        @tool
        async def handoff_to_agent(agent_name: str, user_request: str) -> Dict[str, Any]:
            """
            Hand off a task to a partner A2A agent

            Args:
                agent_name: Name of the agent to contact
                user_request: The user's original request
            """
            # Find the requested agent
            card = agent_cards.get(agent_name)
            if not card:
                return {"status": "error", "message": f"Agent '{agent_name}' not found"}

            async with httpx.AsyncClient(timeout=30) as client:
                a2a_client = A2AClient(client, card)

                # Create the message request
                request = SendMessageRequest(
                    id=str(uuid4()),
                    params=MessageSendParams(
                        message=Message(
                            role=Role.user,
                            parts=[Part(root=TextPart(text=user_request))],
                            messageId=uuid4().hex,
                        )
                    ),
                )

                # Send message to agent
                response = await a2a_client.send_message(request)

                if isinstance(response.root, SendMessageSuccessResponse):
                    # Extract text from response
                    response_text = extract_response_text(response.root)
                    return {"status": "success", "response": response_text}

                return {"status": "error", "message": "Technical Error"}

        return handoff_to_agent


    def extract_response_text(response: SendMessageSuccessResponse):
        # Concatenate the text parts of the reply, skipping non-text parts
        return "".join(
            part.root.text
            for part in response.result.parts
            if part.root.kind == "text"
        )

Now we'll create our personal assistant and inject the discovered agents:

    from langchain_aws import ChatBedrockConverse
    from langgraph.prebuilt import create_react_agent


    async def create_personal_assistant(agent_urls):
        """Create a personal assistant with A2A capabilities"""
        # Discover available agents
        agent_cards, agent_descriptions = await discover_agents(agent_urls)

        # Create handoff tool
        handoff_tool = await create_handoff_tool(agent_cards)

        # Create system prompt
        system_prompt = build_system_prompt(agent_descriptions)

        # Create the agent
        assistant = create_react_agent(
            prompt=system_prompt,
            model=ChatBedrockConverse(model="eu.anthropic.claude-3-7-sonnet-20250219-v1:0"),
            tools=[handoff_tool],
            debug=True,
        )

        return assistant


    def build_system_prompt(agent_descriptions):
        # Each description is already formatted as a "- **name**: ..." bullet,
        # so joining with newlines produces the full list.
        agents_list = "\n".join(agent_descriptions)

        return f"""
        You are a helpful personal assistant with access to a network of partner AI agents.

        Available agents:
        {agents_list}

        When handling user requests:
        1. Assess if the request requires specialized knowledge that one of the available agents can provide
        2. If specialized help is needed, use handoff_to_agent with the appropriate agent details
        3. If no specialized agent is needed, handle the request directly with your knowledge

        Choose the most relevant agent based on their description and skills.
        """

Finally, we launch our agent:

    import asyncio

    from langchain_core.messages import AIMessage, HumanMessage


    async def main():
        """Main function demonstrating the A2A client usage"""
        agent_urls = ["http://localhost:9999/"]
        assistant = await create_personal_assistant(agent_urls)

        user_question = "I want to buy a macbook for work"
        data = await assistant.ainvoke({"messages": [HumanMessage(content=user_question)]})

        # Print the conversation, including any tool calls the model made
        for msg in data["messages"]:
            if isinstance(msg, HumanMessage):
                print(f"[Human]: {msg.content}\n")
            elif isinstance(msg, AIMessage):
                if isinstance(msg.content, list):
                    for part in msg.content:
                        if part["type"] == "text":
                            print(f"[AI]: {part['text']}\n")
                        elif part["type"] == "tool_use":
                            print(
                                f"[Tool Call]: {part['name']} with input {part['input']}\n"
                            )
                else:
                    print(f"[AI]: {msg.content}\n")


    if __name__ == "__main__":
        asyncio.run(main())

When you run the script, you'll see the agent sending the query to the sales agent:

[Human]: I want to buy a macbook for work

[AI]: I'd be happy to help you purchase a MacBook for work. Since this involves finding and purchasing a specific product, I'll connect you with our Sales Agent who specializes in helping customers buy products.

[Tool Call]: handoff_to_agent with input {'agent_name': 'Sales Agent', 'user_request': 'I want to buy a macbook for work'}

[AI]: Great news! Our Sales Agent has suggested the following MacBook option for your work needs:

## Apple MacBook M2 Pro

- **Price**: $1,000.00

- **Features**: Powered by the Apple M2 Pro chip, offering excellent performance for professional workloads

- **Details**: Well-suited for various work applications including design, development, business tasks, and content creation

This model offers several advantages for professional work:

- Powerful performance with the M2 Pro chip

- Great battery life for work on the go

- High-quality display for detailed work

- macOS operating system with business and productivity applications

Would you like more specific information about this MacBook model, such as technical specifications? Or would you like to proceed with purchasing the Apple MacBook M2 Pro? I can help you with either option.

MCP vs A2A

A2A is not an alternative to MCP—these two protocols operate at different levels of abstraction and can be used together.

- MCP is designed for exposing tools, prompts, and data resources to AI agents.

- A2A, on the other hand, is about exposing full agents that can perform autonomous reasoning and actions over multiple turns.

A2A is particularly well-suited for long-running, multi-step tasks that require coordination beyond what a single tool can provide.

Technical Challenges

As we move toward an AI-first web, several technical challenges emerge:

- Scalability: Agent systems frequently handle long-running requests, multi-turn conversations, and tool invocations, all of which make scaling under high load challenging.

- Security: Automated agent-to-agent interactions introduce new risks such as prompt injection, unauthorized access to tools, and cross-agent data leakage.

- Discoverability: A key open question is how personal agents will find the right services. Will we need a centralized directory, a kind of “Google for agents”, where businesses can register their MCP servers and A2A agents?
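As one small illustration of the security point above, a server can check every requested tool against a per-agent allowlist before executing it. This is only a sketch under stated assumptions: the agent identifiers, the `create_order` tool, and the `authorize_tool_call` helper are hypothetical, and a real deployment would derive permissions from its authentication layer rather than a hard-coded table.

```python
class ToolNotAllowedError(Exception):
    """Raised when an agent requests a tool it has not been granted."""


# Hypothetical per-agent permissions, hard-coded for illustration only.
TOOL_ALLOWLIST = {
    "personal-assistant": {"search_products"},
    "sales-agent": {"search_products", "create_order"},
}


def authorize_tool_call(agent_id: str, tool_name: str) -> None:
    """Raise ToolNotAllowedError unless agent_id is allowed to call tool_name."""
    # Unknown agents get an empty permission set, so they are denied by default.
    allowed = TOOL_ALLOWLIST.get(agent_id, set())
    if tool_name not in allowed:
        raise ToolNotAllowedError(f"{agent_id!r} may not call {tool_name!r}")


authorize_tool_call("personal-assistant", "search_products")  # passes silently
try:
    authorize_tool_call("personal-assistant", "create_order")
except ToolNotAllowedError as exc:
    print(f"Blocked: {exc}")
```

A check like this does not address prompt injection on its own, but it limits what a compromised or confused agent can trigger.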

Business Challenges

As services become accessible through AI agents rather than traditional UIs, businesses face several unique challenges:

- Pricing for Agent-Mediated Transactions: Businesses will need to rethink monetization strategies to cover the cost of transactions handled by their agents, because each agent interaction can consume a large number of input and output tokens.

- Legal and Regulatory Compliance: When agents act on behalf of users—making purchases, signing up for services, or sharing sensitive data—businesses must ensure that these actions meet privacy laws, terms of service, and jurisdictional regulations. Liability and consent mechanisms must be re-evaluated.

- Discoverability and Differentiation: Without visual branding or user interfaces, how do users choose your service over a competitor’s? Businesses will need to invest in metadata, service quality, and agent partnerships to remain visible and preferred in agent ecosystems.

Conclusion

The transition to an AI-agent-driven web will become a necessity for businesses. By adopting protocols like MCP and A2A, companies can ensure their services remain discoverable, accessible, and usable in a world